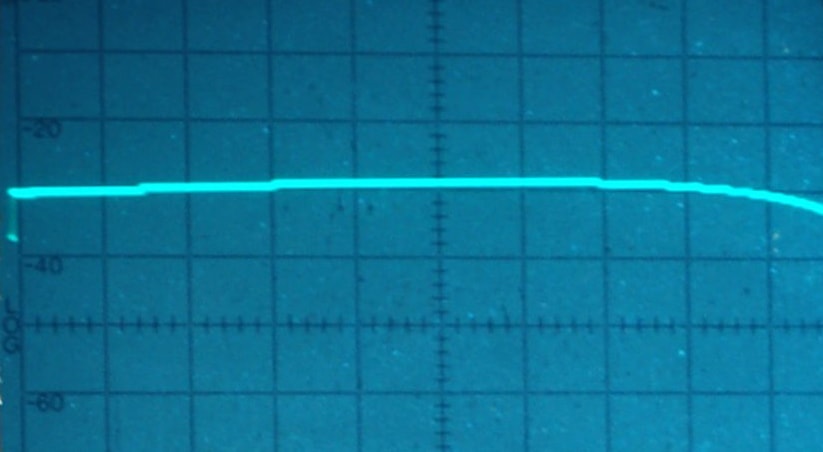

Excerpt from the original Western Electric 300B data sheet.

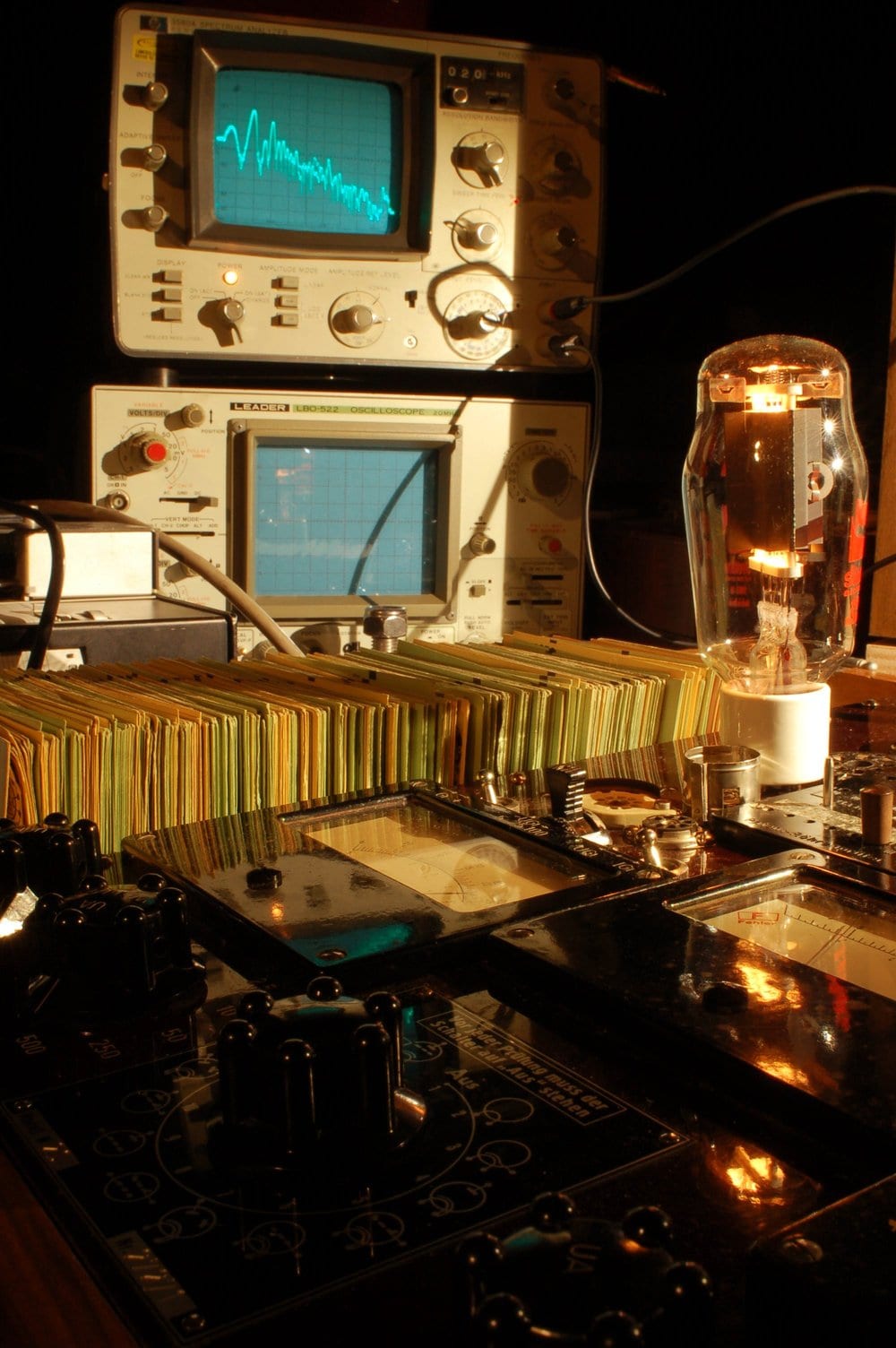

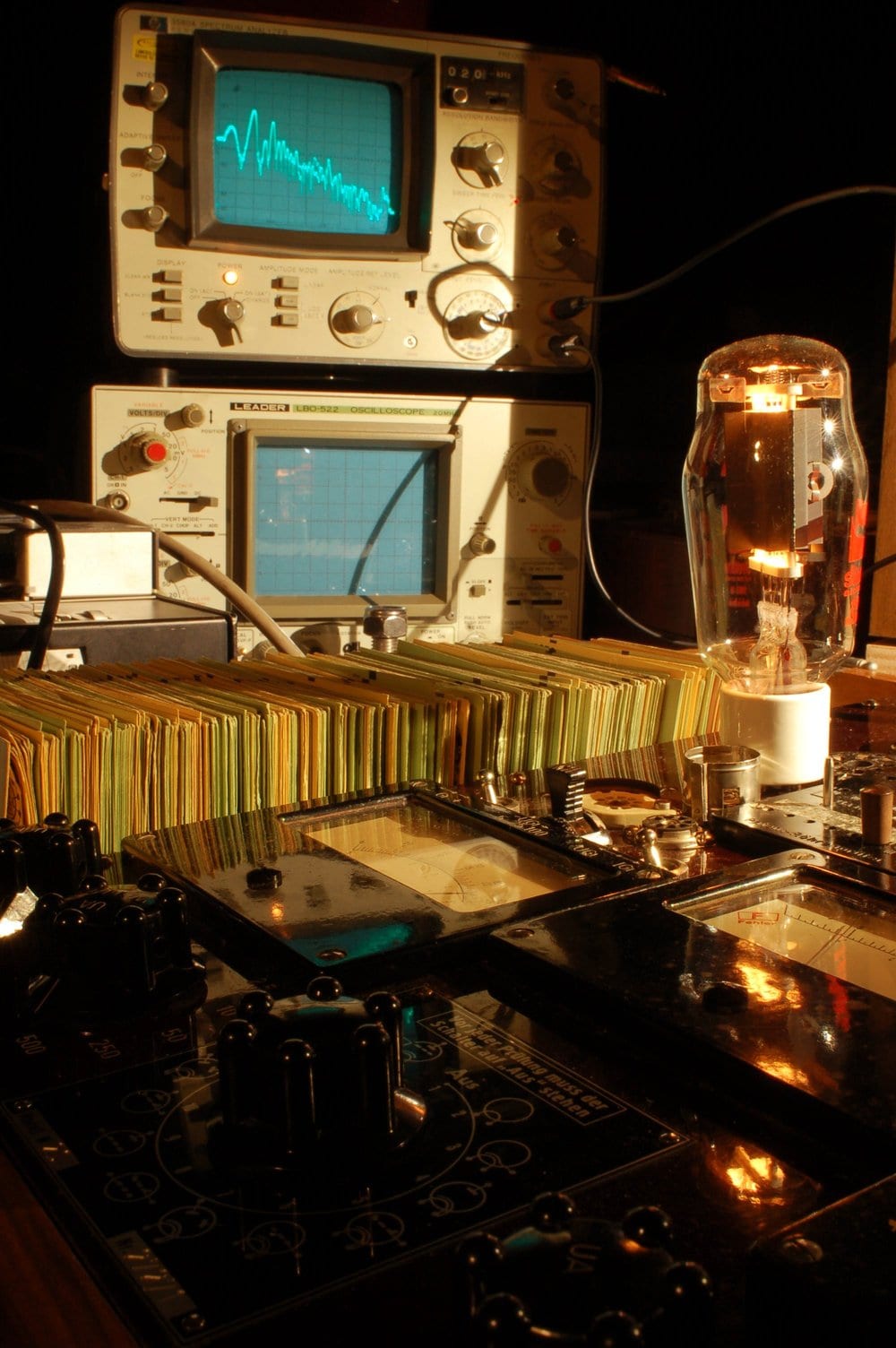

A transmitting triode tube in the lab, undergoing testing. Photo courtesy of Agnew Analog Reference Instruments.
